Use PSO to Find the Minimum in OpenCASCADE

eryar@163.com

Abstract. Starting from OCCT 6.8.0, OpenCASCADE includes one more algorithm for solving global optimization problems: Particle Swarm Optimization (PSO). Its development was triggered by the insufficient performance and robustness of the existing algorithm for minimizing the curve-surface distance in the Extrema package. Algorithms in the PSO family are stochastic, and this feature may be perceived as the opposite of robustness. However, PSO turned out to be not only much faster than the original deterministic algorithm but also more robust in complex real-world situations. In particular, it was able to find solutions in tangential or degenerate configurations, where deterministic algorithms perform poorly and require extensive oversampling to obtain robust results. This paper focuses on the usage and applications of the PSO algorithm.

Key Words. PSO, Particle Swarm Optimization, Minimization

1. Introduction

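Particle Swarm Optimization (PSO) is a global stochastic search algorithm based on swarm intelligence. Kennedy and Eberhart proposed it after drawing on results from artificial life research, and it simulates the migration and flocking of birds searching for food. Many organisms in nature show some form of collective behavior. One of the main research areas of artificial life is the study of such behavior and the construction of computer models of it. The collective behavior of bird flocks and fish schools has long interested scientists. In 1987 Craig Reynolds proposed a very influential flocking model (Boids). In his simulation, every individual follows three rules:

(1) avoid colliding with neighboring individuals;

(2) match the velocity of neighboring individuals;

(3) fly toward the center of the flock, while the flock as a whole flies toward the target.

These three simple rules alone are enough for the simulation to reproduce the flight of a bird flock very closely. In 1995 the American social psychologist James Kennedy and the electrical engineer Russell Eberhart jointly proposed the particle swarm algorithm. Its basic idea came from research on modeling and simulating the collective behavior of birds. Their model and simulation algorithm were mainly a modification of Frank Heppner's model, changed so that the particles fly through the solution space and land at the best solution. Kennedy describes the origins of the particle swarm idea in his book.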

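PSO is simple and easy to implement, needs no gradient information and has few parameters. For these reasons it performs well on both continuous and discrete optimization problems. Its natural real-valued encoding makes it especially suitable for real-valued optimization. In recent years it has become a research hotspot in intelligent optimization. PSO was first applied to the optimization of nonlinear continuous functions and to the training of neural networks. It was later used for constrained, multi-objective and dynamic optimization problems, among others.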
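The canonical global-best update makes this idea concrete. At each step a particle's velocity blends three terms: its previous velocity (inertia), a pull toward its own best position, and a pull toward the best position found by the whole swarm. The sketch below is a minimal, self-contained C++ illustration under stated assumptions. The names `objective`, `minimizePso` and `PsoResult`, the inertia and acceleration values and the fixed seed are choices made here. It is not the code behind OpenCASCADE's math_PSO. The objective is the test function used in the example later in this article.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <limits>
#include <random>
#include <vector>

using Point2 = std::array<double, 2>;

// Objective from the article's example: f(x) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2.
double objective(const Point2& x)
{
  return x[0] - x[1] + 2.0 * x[0] * x[0] + 2.0 * x[0] * x[1] + x[1] * x[1];
}

struct PsoResult
{
  Point2 best;  // best position found by the swarm
  double value; // objective value at that position
};

// Minimal global-best PSO on the box [lo, hi]^2 (illustrative sketch, not math_PSO).
PsoResult minimizePso(double lo, double hi, int nbParticles, int nbIter)
{
  assert(lo < hi && nbParticles > 0 && nbIter >= 0);
  std::mt19937 rng(12345); // fixed seed, so runs are reproducible
  std::uniform_real_distribution<double> unit(0.0, 1.0);
  const double w = 0.7298, c1 = 1.49618, c2 = 1.49618; // commonly used constriction-derived values
  const double vMax = hi - lo;

  std::vector<Point2> pos(nbParticles), vel(nbParticles), pBest(nbParticles);
  std::vector<double> pBestVal(nbParticles);
  PsoResult swarm{{0.0, 0.0}, std::numeric_limits<double>::infinity()};

  // Init: random positions inside the box and small random velocities.
  for (int i = 0; i < nbParticles; ++i)
  {
    for (int d = 0; d < 2; ++d)
    {
      pos[i][d] = lo + (hi - lo) * unit(rng);
      vel[i][d] = (2.0 * unit(rng) - 1.0) * 0.1 * vMax;
    }
    pBest[i] = pos[i];
    pBestVal[i] = objective(pos[i]);
    if (pBestVal[i] < swarm.value) swarm = {pos[i], pBestVal[i]};
  }

  // Loop: inertia + pull toward personal best + pull toward swarm best.
  for (int it = 0; it < nbIter; ++it)
  {
    for (int i = 0; i < nbParticles; ++i)
    {
      for (int d = 0; d < 2; ++d)
      {
        vel[i][d] = w * vel[i][d]
                  + c1 * unit(rng) * (pBest[i][d] - pos[i][d])
                  + c2 * unit(rng) * (swarm.best[d] - pos[i][d]);
        vel[i][d] = std::max(-vMax, std::min(vMax, vel[i][d]));       // clamp velocity
        pos[i][d] = std::max(lo, std::min(hi, pos[i][d] + vel[i][d])); // stay inside the box
      }
      const double f = objective(pos[i]);
      if (f < pBestVal[i]) { pBestVal[i] = f; pBest[i] = pos[i]; }
      if (f < swarm.value) swarm = {pos[i], f};
    }
  }
  return swarm;
}
```

Calling `minimizePso(-10.0, 10.0, 32, 300)` is expected to land close to the minimum -1.25 at (-1, 1.5). The exact digits depend on the seed and the parameter values.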

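Many problems in OpenCASCADE reduce to the optimization of nonlinear continuous functions. Examples in the Extrema package include computing the extrema between a curve and a surface, or between two curves. This article focuses on how to use PSO in OpenCASCADE. Once its usage is clear, the related applications are easier to understand.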

2. PSO Usage

To improve performance and robustness, OpenCASCADE introduced the swarm-intelligence algorithm PSO (Particle Swarm Optimization). The corresponding class is math_PSO, declared as follows:

```cpp
//! In this class implemented variation of Particle Swarm Optimization (PSO) method.
//! A. Ismael F. Vaz, L. N. Vicente
//! "A particle swarm pattern search method for bound constrained global optimization"
//!
//! Algorithm description:
//! Init Section:
//! At start of computation a number of "particles" are placed in the search space.
//! Each particle is assigned a random velocity.
//!
//! Computational loop:
//! The particles are moved in cycle, simulating some "social" behavior, so that new position of
//! a particle on each step depends not only on its velocity and previous path, but also on the
//! position of the best particle in the pool and best obtained position for current particle.
//! The velocity of the particles is decreased on each step, so that convergence is guaranteed.
//!
//! Algorithm output:
//! Best point in param space (position of the best particle) and value of objective function.
//!
//! Pros:
//! One of the fastest algorithms.
//! Work over functions with a lot local extremums.
//! Does not require calculation of derivatives of the functional.
//!
//! Cons:
//! Convergence to global minimum not proved, which is a typical drawback for all stochastic algorithms.
//! The result depends on random number generator.
//!
//! Warning: PSO is effective to walk into optimum surrounding, not to get strict optimum.
//! Run local optimization from pso output point.
//! Warning: In PSO used fixed seed in RNG, so results are reproducible.
class math_PSO
{
public:

  /**
  * Constructor.
  *
  * @param theFunc defines the objective function. It should exist during all lifetime of class instance.
  * @param theLowBorder defines lower border of search space.
  * @param theUppBorder defines upper border of search space.
  * @param theSteps defines steps of regular grid, used for particle generation.
                    This parameter used to define stop condition (TerminalVelocity).
  * @param theNbParticles defines number of particles.
  * @param theNbIter defines maximum number of iterations.
  */
  Standard_EXPORT math_PSO(math_MultipleVarFunction* theFunc,
                           const math_Vector& theLowBorder,
                           const math_Vector& theUppBorder,
                           const math_Vector& theSteps,
                           const Standard_Integer theNbParticles = 32,
                           const Standard_Integer theNbIter = 100);

  //! Perform computations, particles array is constructed inside of this function.
  Standard_EXPORT void Perform(const math_Vector& theSteps,
                               Standard_Real& theValue,
                               math_Vector& theOutPnt,
                               const Standard_Integer theNbIter = 100);

  //! Perform computations with given particles array.
  Standard_EXPORT void Perform(math_PSOParticlesPool& theParticles,
                               Standard_Integer theNbParticles,
                               Standard_Real& theValue,
                               math_Vector& theOutPnt,
                               const Standard_Integer theNbIter = 100);

private:

  void performPSOWithGivenParticles(math_PSOParticlesPool& theParticles,
                                    Standard_Integer theNbParticles,
                                    Standard_Real& theValue,
                                    math_Vector& theOutPnt,
                                    const Standard_Integer theNbIter = 100);

  math_MultipleVarFunction *myFunc;
  math_Vector myLowBorder; // Lower border.
  math_Vector myUppBorder; // Upper border.
  math_Vector mySteps; // steps used in PSO algorithm.
  Standard_Integer myN; // Dimension count.
  Standard_Integer myNbParticles; // Particles number.
  Standard_Integer myNbIter;
};
```
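The first warning in the header suggests a two-stage scheme. PSO brings the point into the neighborhood of the optimum, and a local method then polishes it. The sketch below shows only the second stage, in plain C++, using compass search. Compass search is a simple derivative-free pattern search; the Vaz and Vicente method cited in the header likewise combines a particle swarm with pattern-search polling. The starting point (-0.9, 1.6) is a hypothetical stand-in for a PSO output, and `compassSearch` is an illustrative name, not an OpenCASCADE API. Within OpenCASCADE, local minimizers such as math_BFGS or math_FRPR could fill this role when gradients are available.

```cpp
#include <array>
#include <cassert>

using Point2 = std::array<double, 2>;

// Same objective as the article's example: f(x) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2.
double objective(const Point2& x)
{
  return x[0] - x[1] + 2.0 * x[0] * x[0] + 2.0 * x[0] * x[1] + x[1] * x[1];
}

// Compass search: poll x +/- step along each coordinate axis and move to the first
// poll point that lowers f; if no poll point improves, halve the step.
// Stops once the step falls below tol.
Point2 compassSearch(Point2 x, double step, double tol)
{
  assert(step > 0.0 && tol > 0.0);
  const double signs[2] = {1.0, -1.0};
  double fx = objective(x);
  while (step >= tol)
  {
    bool improved = false;
    for (int d = 0; d < 2 && !improved; ++d)
    {
      for (double sign : signs)
      {
        Point2 trial = x;
        trial[d] += sign * step;
        const double ft = objective(trial);
        if (ft < fx)
        {
          x = trial;
          fx = ft;
          improved = true;
          break;
        }
      }
    }
    if (!improved) step *= 0.5; // no improving direction at this scale: refine the mesh
  }
  return x;
}
```

For example, `compassSearch({-0.9, 1.6}, 0.1, 1e-10)` should converge to the exact minimizer (-1, 1.5) up to floating-point resolution.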

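The main inputs of math_PSO are the multivariate function to be minimized, given as a math_MultipleVarFunction, and the range of each variable. The following example shows how to turn a mathematical problem into code and solve it with a program. The problem is taken from the textbook 最優化方法 (Optimization Methods) [2]:

$$\min_{x_1, x_2} f(x) = x_1 - x_2 + 2x_1^2 + 2x_1x_2 + x_2^2$$

The implementation is as follows: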

```cpp
/*
Copyright(C) 2017 Shing Liu(eryar@163.com)

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
*/

// NOTE
// ----
// Tool: Visual Studio 2013 & OpenCASCADE7.1.0
// Date: 2017-04-18 20:52

#include <iostream>

#include <math_MultipleVarFunction.hxx>
#include <math_PSO.hxx>
#include <math_Vector.hxx>

// MSVC-specific: link the OpenCASCADE toolkits that provide math_PSO.
#pragma comment(lib, "TKernel.lib")
#pragma comment(lib, "TKMath.lib")

// define function:
// f(x) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2
class TestFunction : public math_MultipleVarFunction
{
public:
  // Number of independent variables (x1, x2).
  virtual Standard_Integer NbVariables() const
  {
    return 2;
  }

  // Evaluate f at X; the vectors below are created with lower index 1.
  virtual Standard_Boolean Value(const math_Vector& X, Standard_Real& F)
  {
    F = X(1) - X(2) + 2.0 * X(1) * X(1) + 2.0 * X(1) * X(2) + X(2) * X(2);
    return Standard_True;
  }
};

void test()
{
  TestFunction aFunction;

  // Search box [-10, 10] x [-10, 10] and grid steps used for particle generation.
  math_Vector aLowerBorder(1, aFunction.NbVariables());
  math_Vector aUpperBorder(1, aFunction.NbVariables());
  math_Vector aSteps(1, aFunction.NbVariables());

  aLowerBorder(1) = -10.0;
  aLowerBorder(2) = -10.0;
  aUpperBorder(1) = 10.0;
  aUpperBorder(2) = 10.0;
  aSteps(1) = 0.1;
  aSteps(2) = 0.1;

  Standard_Real aValue = 0.0;
  math_Vector aOutput(1, aFunction.NbVariables());

  // Default settings: 32 particles, at most 100 iterations.
  math_PSO aPso(&aFunction, aLowerBorder, aUpperBorder, aSteps);
  aPso.Perform(aSteps, aValue, aOutput);

  std::cout << "Minimum value: " << aValue << " at (" << aOutput(1) << ", " << aOutput(2) << ")" << std::endl;
}

int main(int argc, char* argv[])
{
  test();
  return 0;
}
```

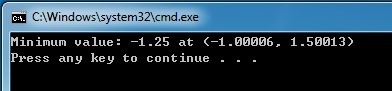

計算結果為最小值-1.25, 在x1=-1,x2=1.50013處取得,如下圖所示:
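Because the example function is a convex quadratic, the reported result can be checked by hand. Setting the gradient to zero gives the exact minimizer. The Hessian is positive definite (its leading minors are 4 and 4 · 2 - 2 · 2 = 4), so this stationary point is the global minimum:

$$
\begin{aligned}
\frac{\partial f}{\partial x_1} &= 1 + 4x_1 + 2x_2 = 0, \qquad
\frac{\partial f}{\partial x_2} = -1 + 2x_1 + 2x_2 = 0
\;\Rightarrow\; x_1 = -1,\; x_2 = \tfrac{3}{2}, \\
H &= \begin{pmatrix} 4 & 2 \\ 2 & 2 \end{pmatrix}, \qquad
f\!\left(-1, \tfrac{3}{2}\right) = -1 - \tfrac{3}{2} + 2 - 3 + \tfrac{9}{4} = -\tfrac{5}{4}.
\end{aligned}
$$

The PSO value of -1.25 matches. The small offset in x2 (1.50013 instead of 1.5) is consistent with the warning in the math_PSO header: PSO walks into the neighborhood of the optimum rather than reaching the strict optimum.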

3. Applications

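Computing the extrema between a curve and a surface is likewise a problem of finding the extrema of a multivariate function. The corresponding class is Extrema_GenExtCS.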
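A hypothetical example makes the reduction concrete; it is not Extrema_GenExtCS's code. Take the line C(t) = (t, 1, 3) and the unit sphere S(u, v) = (cos v cos u, cos v sin u, sin v). The squared distance F(t, u, v) = |C(t) - S(u, v)|^2 is a function of three variables, and the square root of its minimum is the curve-surface distance. Here that distance is sqrt(10) - 1 ≈ 2.1623: the distance from the origin to the line, minus the radius. The sketch minimizes F by brute-force sampling on a regular grid. This is roughly the kind of oversampling the abstract attributes to deterministic approaches; a global optimizer such as math_PSO would take its place. All names are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <limits>

using Vec3 = std::array<double, 3>;

// Hypothetical curve: the straight line C(t) = (t, 1, 3).
Vec3 curvePoint(double t) { return {t, 1.0, 3.0}; }

// Hypothetical surface: unit sphere, u = longitude, v = latitude.
Vec3 surfacePoint(double u, double v)
{
  return {std::cos(v) * std::cos(u), std::cos(v) * std::sin(u), std::sin(v)};
}

// Objective of three variables: F(t, u, v) = |C(t) - S(u, v)|^2.
double squaredDistance(double t, double u, double v)
{
  const Vec3 c = curvePoint(t);
  const Vec3 s = surfacePoint(u, v);
  double sum = 0.0;
  for (int k = 0; k < 3; ++k) sum += (c[k] - s[k]) * (c[k] - s[k]);
  return sum;
}

struct CurveSurfaceMin
{
  double t, u, v;  // parameters of the best sample
  double distance; // |C(t) - S(u, v)| at that sample
};

// Brute-force sampling of F on a regular grid over
// t in [-2, 2], u in [0, 2*pi), v in [-pi/2, pi/2].
CurveSurfaceMin sampleCurveSurface(int nt, int nu, int nv)
{
  assert(nt > 1 && nu > 0 && nv > 1);
  const double pi = std::acos(-1.0);
  CurveSurfaceMin best{0.0, 0.0, 0.0, 0.0};
  double bestF = std::numeric_limits<double>::infinity();
  for (int i = 0; i < nt; ++i)
    for (int j = 0; j < nu; ++j)
      for (int k = 0; k < nv; ++k)
      {
        const double t = -2.0 + 4.0 * i / (nt - 1);
        const double u = 2.0 * pi * j / nu;
        const double v = -0.5 * pi + pi * k / (nv - 1);
        const double f = squaredDistance(t, u, v);
        if (f < bestF)
        {
          bestF = f;
          best = {t, u, v, 0.0};
        }
      }
  best.distance = std::sqrt(bestF);
  return best;
}
```

An 81 x 72 x 37 grid (about 216,000 evaluations) should give a sampled distance within about 1e-3 of sqrt(10) - 1. The cost of such grids grows quickly with resolution and with the number of parameters, which is why replacing them with a stochastic optimizer pays off.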

4. Conclusion

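Common optimization algorithms are all local optimization methods. They include the conjugate gradient method, the Newton-Raphson method (math_NewtonMinimum), the Fletcher-Reeves method (math_FRPR) and the Broyden-Fletcher-Goldfarb-Shanno method (math_BFGS). All of them were proposed for unconstrained optimization problems, and all of them place analytic requirements on the objective function. Newton-Raphson, for example, requires the objective function to be continuously differentiable with continuous first derivatives. math_NewtonMinimum in OpenCASCADE additionally requires the multivariate function to provide its Hessian matrix, i.e. continuous second derivatives.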
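The article's test function shows these analytic requirements clearly. A Newton step x <- x - H^-1 grad f(x) needs both the gradient and the Hessian. For a quadratic the Hessian is constant, so one step from any starting point lands exactly on the minimizer. The sketch below hard-codes both derivatives by hand. The helper names `gradient` and `newtonStep` are illustrative and do not reflect math_NewtonMinimum's interface. PSO, by contrast, only evaluates the function value.

```cpp
#include <array>
#include <cassert>

using Point2 = std::array<double, 2>;

// Hand-derived gradient of f(x) = x1 - x2 + 2x1^2 + 2x1x2 + x2^2.
Point2 gradient(const Point2& x)
{
  return {1.0 + 4.0 * x[0] + 2.0 * x[1], -1.0 + 2.0 * x[0] + 2.0 * x[1]};
}

// One Newton step x - H^{-1} grad f(x) with the constant Hessian H = [[4, 2], [2, 2]].
// The 2x2 system H p = grad f(x) is solved with Cramer's rule.
Point2 newtonStep(const Point2& x)
{
  const double h11 = 4.0, h12 = 2.0, h22 = 2.0;
  const double det = h11 * h22 - h12 * h12; // = 4, so H is invertible
  assert(det != 0.0);
  const Point2 g = gradient(x);
  const double p1 = (h22 * g[0] - h12 * g[1]) / det;
  const double p2 = (h11 * g[1] - h12 * g[0]) / det;
  return {x[0] - p1, x[1] - p2};
}
```

Starting from (5, -3), or from any other point, a single `newtonStep` should return (-1, 1.5).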

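Modern science and technology keep developing, and disciplines increasingly overlap. As a result, many practical engineering optimization problems involve a large amount of complex scientific computation and also have strict real-time requirements. Such complex optimization problems usually have the following characteristics:

1) The object being optimized involves many factors, so the objective function has many independent variables, often hundreds or even tens of thousands, which greatly increases the computational cost;

2) The complexity of the problem makes the objective function nonlinear. Some objective functions are non-differentiable or discontinuous, and in extreme cases cannot be expressed analytically at all. Examples are the discontinuities at cusps or degenerate points caused by degenerate curves or surfaces;

3) The objective function has many, or even infinitely many, extrema in its domain, and the shape of the solution space is complex.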
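Point 3 is the one that defeats purely local methods, because where such a method ends up depends on its starting point. Take the small hypothetical 1-D function f(x) = (x^2 - 1)^2 + 0.3x, which has a shallower minimum near x = 1 and a deeper one near x = -1. Plain gradient descent started at x = 0.5 stops in the shallower basin, while even crude global sampling finds the deeper one. Dense sampling is used here only as the simplest global strategy. It becomes impractical as the number of variables grows (point 1), which is where a method like PSO comes in. The function and helper names are illustrative.

```cpp
#include <cassert>

// Hypothetical 1-D function with two basins: a shallower minimum near x = 1
// and a deeper (global) minimum near x = -1.
double bumpy(double x) { return (x * x - 1.0) * (x * x - 1.0) + 0.3 * x; }
double bumpyDerivative(double x) { return 4.0 * x * (x * x - 1.0) + 0.3; }

// Fixed-step gradient descent: a purely local method.
double gradientDescent(double x, double rate, int nbIter)
{
  for (int i = 0; i < nbIter; ++i) x -= rate * bumpyDerivative(x);
  return x;
}

// Dense sampling of [lo, hi]: crude, but it looks at the whole interval.
double sampleMinimum(double lo, double hi, int nbSamples)
{
  assert(nbSamples > 1 && lo < hi);
  double bestX = lo;
  for (int i = 0; i < nbSamples; ++i)
  {
    const double x = lo + (hi - lo) * i / (nbSamples - 1);
    if (bumpy(x) < bumpy(bestX)) bestX = x;
  }
  return bestX;
}
```

With `gradientDescent(0.5, 0.01, 5000)` the iterate should settle near x = 0.96. `sampleMinimum(-2.0, 2.0, 4001)` should return a point near x = -1.04 with a lower function value.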

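When one or more of these three factors appear in an optimization problem, the problem becomes much harder to solve. Purely analytic, deterministic optimization methods rarely succeed on their own, so they must be combined with other methods.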

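PSO is simple, easy to implement and fast. It needs neither the gradient (first derivatives) nor the Hessian matrix (second derivatives) of the objective function. PSO does have a weakness, shared by many intelligent algorithms: it only finds a solution that satisfies the stopping criteria, and this solution cannot be guaranteed to be the exact optimum.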

5. References

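1. aml. Application of stochastic algorithms in extrema. https://dev.opencascade.org/index.php?q=node/988

2. He Jianyong (何堅勇). 最優化方法 (Optimization Methods). Tsinghua University Press, 2007.

3. Shen Xianjun (沈顯君). 自適應粒子群優化算法及其應用 (Adaptive Particle Swarm Optimization Algorithms and Their Applications). Tsinghua University Press, 2015.

4. Wang Dingwei (汪定偉), Wang Junwei (王俊偉), Wang Hongfeng (王洪峰), Zhang Ruiyou (張瑞友), Guo Zhe (郭哲). 智能優化方法 (Intelligent Optimization Methods). Higher Education Press, 2007.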