http://en.wikipedia.org/wiki/Subgradient_method

Classical subgradient rules

Let  be a convex function with domain

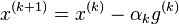

be a convex function with domain  . A classical subgradient method iterates

. A classical subgradient method iterates

where  denotes a subgradient of

denotes a subgradient of  at

at  . If

. If  is differentiable, then its only subgradient is the gradient vector

is differentiable, then its only subgradient is the gradient vector  itself. It may happen that

itself. It may happen that  is not a descent direction for

is not a descent direction for  at

at  . We therefore maintain a list

. We therefore maintain a list  that keeps track of the lowest objective function value found so far, i.e.

that keeps track of the lowest objective function value found so far, i.e.
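A minimal Python sketch of this iteration, keeping the running minimum $f_{\mathrm{best}}$ and the point where it was reached. It assumes a diminishing step size $\alpha_k = 1/(k+1)$ (a common choice; the rule itself is not fixed above) and uses a hypothetical test function $f(x) = \sum_i |x_i - c_i|$, which is not differentiable wherever $x_i = c_i$. The names `subgradient_method`, `f_l1` and `g_l1` are illustrative only.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def subgradient_method(
    f: Callable[[Sequence[float]], float],
    subgrad: Callable[[Sequence[float]], Vector],
    x0: Sequence[float],
    step: Callable[[int], float],
    iters: int,
) -> Tuple[Vector, float]:
    """Iterate x^(k+1) = x^(k) - alpha_k * g^(k); return the best point and f_best."""
    x = [float(v) for v in x0]
    x_best, f_best = list(x), f(x)
    for k in range(iters):
        g = subgrad(x)   # any subgradient of f at x^(k)
        alpha = step(k)  # step size alpha_k > 0
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
        fx = f(x)
        # -g need not be a descent direction, so f(x) may go up:
        # keep the lowest value seen so far (f_best) and its point.
        if fx < f_best:
            x_best, f_best = list(x), fx
    return x_best, f_best


# Hypothetical test problem: f(x) = sum_i |x_i - c_i|, nondifferentiable where x_i = c_i.
C = [1.0, -2.0]


def f_l1(x: Sequence[float]) -> float:
    return sum(abs(xi - ci) for xi, ci in zip(x, C))


def g_l1(x: Sequence[float]) -> Vector:
    # sign(x_i - c_i) is a valid subgradient; at a kink we pick 0, which lies in [-1, 1].
    return [float((xi > ci) - (xi < ci)) for xi, ci in zip(x, C)]


x_best, f_best = subgradient_method(f_l1, g_l1, [0.0, 0.0], lambda k: 1.0 / (k + 1), 1000)
print(x_best, f_best)
```

With shrinking steps the second coordinate overshoots $c_2 = -2$ and oscillates around it with decreasing amplitude, so some individual steps increase $f$; this is why the sketch returns the best point rather than the last iterate.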

The figure below is taken from: http://www.stanford.edu/class/ee364b/notes/subgradients_notes.pdf

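Example 2: The SVM cost function is the hinge loss, whose derivative does not exist at the kink (1, 0). There one takes a value between the two one-sided derivatives (-1 and 0 for max(0, 1 - z)), but how exactly should it be chosen? Mingming Gong said that one of the two documents, this PDF or http://www.stanford.edu/class/ee364b/lectures/subgrad_method_slides.pdf, seems to cover it.

Mingming Gong asked tianyi which is better, the subgradient method or a smooth approximation. The conclusion: it depends. The subgradient method solves the original problem, whereas smoothing does not. One amounts to approximating the gradient, the other to approximating the function, so it is hard to say which is better.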
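A minimal Python sketch contrasting the two options discussed above. It assumes the usual form of the hinge loss, max(0, 1 - z) with margin z = y * <w, x>, a linear SVM objective without a bias term, and a softplus surrogate as the example smoothing (one of several possible choices, not necessarily the one meant in the discussion). The names `hinge_subgrad`, `svm_subgrad`, `smooth_hinge`, `smooth_hinge_grad` and the `tie` parameter are hypothetical. The subgradient route keeps the original loss and only has to pick a value in [-1, 0] at the kink; the smooth route replaces the loss itself, so it minimizes a slightly different function.

```python
import math
from typing import List, Sequence


def hinge(z: float) -> float:
    """Hinge loss as a function of the margin z = y * <w, x>."""
    return max(0.0, 1.0 - z)


def hinge_subgrad(z: float, tie: float = 0.0) -> float:
    """One subgradient of hinge(z) with respect to z.

    The slope is -1 for z < 1 and 0 for z > 1. At the kink z == 1 the
    subdifferential is the whole interval [-1, 0]; `tie` picks which value to use.
    """
    if not -1.0 <= tie <= 0.0:
        raise ValueError("tie must lie in [-1, 0]")
    if z < 1.0:
        return -1.0
    if z > 1.0:
        return 0.0
    return tie


def svm_subgrad(w: Sequence[float], X: Sequence[Sequence[float]],
                y: Sequence[float], lam: float, tie: float = 0.0) -> List[float]:
    """Subgradient of lam/2 * ||w||^2 + (1/m) * sum_i hinge(y_i * <w, x_i>) (no bias)."""
    m = len(X)
    g = [lam * wj for wj in w]
    for xi, yi in zip(X, y):
        z = yi * sum(wj * xj for wj, xj in zip(w, xi))
        d = hinge_subgrad(z, tie)    # d hinge / dz
        for j, xj in enumerate(xi):
            g[j] += d * yi * xj / m  # chain rule: dz/dw = y_i * x_i
    return g


def smooth_hinge(z: float, mu: float) -> float:
    """Softplus surrogate mu * log(1 + exp((1 - z) / mu)).

    It over-estimates hinge(z) by at most mu * log(2) and tends to hinge(z) as mu -> 0.
    """
    t = (1.0 - z) / mu
    # Stable form: log(1 + e^t) = max(t, 0) + log1p(e^(-|t|))
    return mu * (max(t, 0.0) + math.log1p(math.exp(-abs(t))))


def smooth_hinge_grad(z: float, mu: float) -> float:
    """Derivative of smooth_hinge with respect to z: -sigmoid((1 - z) / mu)."""
    t = (1.0 - z) / mu
    if t >= 0:
        s = 1.0 / (1.0 + math.exp(-t))
    else:
        e = math.exp(t)
        s = e / (1.0 + e)
    return -s
```

For example, `smooth_hinge_grad(1.0, mu)` returns -0.5 for any `mu`: at the kink the smoothing implicitly picks the midpoint of [-1, 0], while the surrogate itself stays up to mu * log(2) above the true hinge loss.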